Understanding speech in noise

People with age-related hearing loss commonly have difficulty understanding speech in noisy social settings such as cocktail parties, cafés, or restaurants.

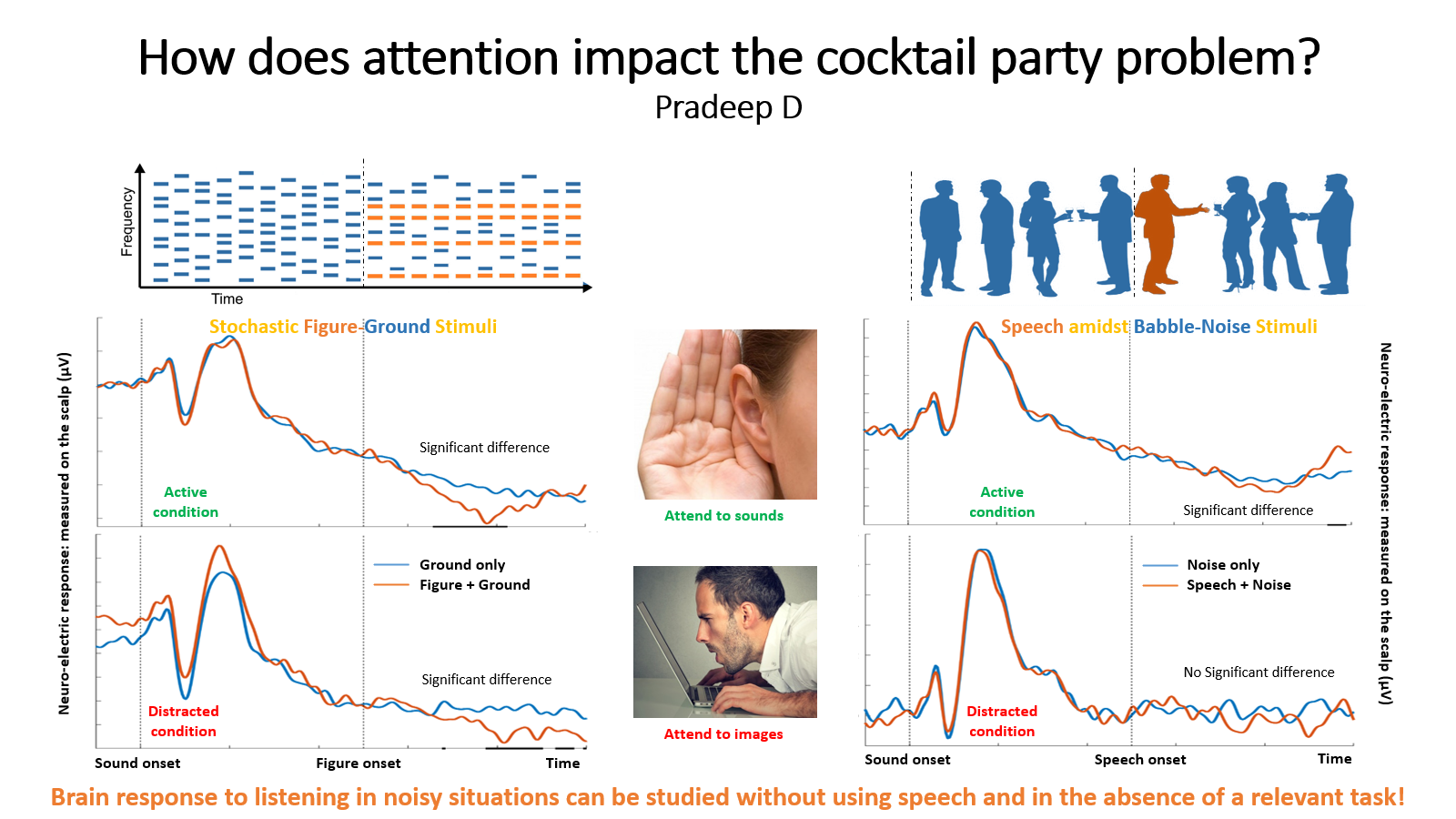

Earlier scientific studies have established that the ability to segregate and group overlapping generic artificial sounds (not words in any language) is related to the ability to understand speech in social chatter.

In my recent study [1], I non-invasively recorded electrical activity from the brain (using EEG) while subjects performed a relevant task (sound segregation and grouping) and an irrelevant task (a visual task). I compared the electrical signals evoked in the brain during segregation and grouping of non-linguistic artificial sounds with those evoked while subjects tried to understand speech amidst babble noise.

I established that the electrical brain activity generated during real-world listening is similar to that generated during artificial sound segregation performed passively, without paying attention. Thus, the brain's response to noisy listening can be studied without using language, even while subjects are not performing a relevant task.

References:

[1] X Guo, Pradeep D, et al., "EEG responses to auditory figure ground perception", Hearing Research, vol. 422, art. no. 108524, 2022.

[2] Supplementary data from this paper, which includes Pure Tone Audiometry (PTA) results and performance in a separate behavioural study.
